Liveness.com

Biometric Liveness Detection Explained

What is "Liveness"?

In biometrics, Liveness Detection is a computer's ability to determine that it is interfacing with a physically present human being and not a spam bot, inanimate spoof artifact or injected video/data. Remember: It's not "Liveliness". Don't make that rookie mistake!

The History of Liveness

In 1950, Alan Turing developed the famous "Turing Test," which measures a computer's ability to exhibit human-like behavior. Conversely, Liveness Detection is AI that determines whether a computer is interacting with a live human.

(Photo: Alan Turing, c. 1928)

The "Godmother of Liveness," Dorothy E. Denning, is a member of the National Cyber Security Hall of Fame and coined the term "Liveness" in her 2001 Information Security Magazine article, "It's 'Liveness,' Not Secrecy, That Counts": "...biometric prints need not be kept secret, but the validation process must check for liveness of the readings." Decades ahead of her time, Dorothy E. Denning's vision for Liveness Detection in biometric face verification could not have been more correct.

(Photo: Dorothy E. Denning)

How Liveness Verification Protects Us

Ms. Denning's 2D photo posted above is biometric data, and it is now cached on your computer. Is she somehow more vulnerable now that you have a copy of her photo? Not if her accounts are secured with strong 3D Liveness Detection because the photo won't fool 3D Liveness AI. Nor will a video, a copy of her driver license, passport, fingerprint, or iris. In fact, she must be physically present to access her accounts, so she need not worry about keeping her biometric data "secret."

3D Liveness Detection prevents spam bots and bad actors from using stolen photos, injected deepfake videos, life-like masks, or other spoofs to create or access online accounts. Liveness ensures only real humans can create and access accounts, and by doing so, Liveness checks solve some very serious problems. For example, Facebook had to delete 5.4 billion fake accounts in 2019 alone! Requiring proof of Liveness would have prevented these fakes from ever being created.

Level 1-5 Threat Vectors - Spoof Artifact & Bypass Types

When a non-living object that exhibits human traits (an "artifact") is presented to a camera or biometric sensor, it's called a "spoof." Photos, videos on screens, masks, and dolls are all common examples of spoof artifacts. When biometric data is tampered with post-capture, or the camera is bypassed altogether, that is called a "bypass." A deepfake puppet injected into the camera feed is an example of a bypass. While there are now NIST/NVLAP lab tests available for PAD Level 3, or Levels 4 & 5 bypasses, only an ongoing Spoof Bounty Program can ensure continued Level 1-5 security.

| Artifact Type | Description |
|---|---|
| Level 1 (A) | Hi-res paper & digital photos, hi-def challenge/response videos and paper masks. Beware: iBeta Lab Tests DO NOT include deepfake puppet attacks, but FaceTec's Spoof Bounty DOES include deepfake puppets. |
| Level 2 (B) | Commercially available lifelike dolls, and human-worn resin, latex & silicone 3D masks under $300 in price. |
| Level 3 (C) | Custom-made ultra-realistic 3D masks, wax heads, etc., up to $3,000 in creation cost. |

| Bypass Type | Description |
|---|---|
| Level 4 | Decrypt & edit the contents of a 3D FaceMap™ to contain synthetic data not collected from the session, then have the Server process it and respond with "Liveness Success." |
| Level 5 | Take over the camera feed & inject previously captured video frames or a deepfake puppet that results in the FaceTec AI responding with "Liveness Success." |

Spoofs & Zero-Day Exploits of Liveness Vendors

A Russian hacker has created warning videos showing how he can exploit weaknesses in Liveness and Remote ID Proofing software using free or very low-cost methods. The videos below explain how to use free tools like Spark AR, an Instagram filter creation app, to create head "movement" and/or simulate random color flashing.

Spoofing Facia with Generated.photos & MugLife (2024)

Spoofing Innovatrics with Generated.photos & FaceSwap (2022)

Spoofing Sum & Substance with Spark AR & Photoshop (2021)

Spoofing Shufti Pro with Veriff.tools & Generated.photos (2021)

These vendors continue to work on making their software more secure, so these exact attacks may not work forever, or even at the time you are reading this. But when weak 2D Liveness is used, there always seem to be ways to beat the system.

These videos show the incredibly difficult challenges that Liveness Detection and ID Proofing vendors are up against in the real world, and they also show why these vendors don't have Spoof Bounty Programs like FaceTec's. The success of the techniques in these videos proves that very few vendors are truly up to the task, despite many having been handed iBeta "conformances."

Please note that the creator of these videos spent significant amounts of time attacking FaceTec's Spoof Bounty Program but has been unable to spoof or bypass the system, even with these techniques.

NIST 2023 PAD Tests Show 2D Liveness Can't Even Stop Basic Digital Photo Attacks

The NIST PAD Testing showed that no 2D Liveness Vendors were secure against even basic presentation attacks, let alone video injection attacks or other camera bypass attacks, which even NIST now acknowledges are the future of scalable attacks:

"As presentation attack methods evolve, attackers will also work to exploit weaknesses in the implementation after the sensor i.e., in the digital domain. These 'injection attacks' feature a direct electronic introduction of a digital image or video. These are recently of particular concern in personal devices where a virtual camera is not readily distinguishable from the physical camera. This is a possibility in those scenarios where the capture cannot be trusted - for example in some mobile phones - because the integrity of the sensor/camera cannot be guaranteed."

42 Vendors participated, and ALL of their 2D Liveness Algos were spoofed multiple times. These poor results led to NIST stating, "Only a small percentage of developers could realistically claim to detect certain presentation attacks using software. Some developers' algorithms could catch two or three types, but none caught them all." - Mei Ngan, NIST computer scientist

For Presentation Attack Type 1 (Still Media), the BEST 2D Liveness Algo allowed a staggering 6.9% of spoofs to pass, and the worst allowed more than 37% of the spoofs to pass. Once a bad actor learns which type of spoof works 6.9-37% of the time, they can tailor future attacks to be nearly 100% successful.
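To see why even a single-digit pass rate is dangerous, here is a minimal Python sketch that assumes each spoof attempt succeeds independently with the same probability. That is a simplification: a bad actor who tailors attacks does better than this model predicts. The function names are illustrative and not taken from any NIST tool.

```python
def prob_at_least_one_success(per_attempt_rate: float, attempts: int) -> float:
    """Chance that at least one of `attempts` independent spoofs gets through."""
    if not 0.0 <= per_attempt_rate <= 1.0:
        raise ValueError("per_attempt_rate must be between 0 and 1")
    return 1.0 - (1.0 - per_attempt_rate) ** attempts


def attempts_needed(per_attempt_rate: float, target: float) -> int:
    """Smallest number of attempts whose cumulative success chance reaches `target`."""
    if not 0.0 < per_attempt_rate <= 1.0 or not 0.0 < target < 1.0:
        raise ValueError("need 0 < per_attempt_rate <= 1 and 0 < target < 1")
    attempts = 0
    while prob_at_least_one_success(per_attempt_rate, attempts) < target:
        attempts += 1
    return attempts


if __name__ == "__main__":
    for rate in (0.069, 0.37):  # best and worst Still Media pass rates cited above
        print(f"{rate:.1%} per attempt -> "
              f"{prob_at_least_one_success(rate, 20):.1%} after 20 attempts, "
              f"{attempts_needed(rate, 0.99)} attempts for 99%")
```

Under this model, the 6.9% best-case rate reaches a 99% cumulative success chance within about 65 attempts, and the 37% worst-case rate within 10.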

See NIST's Full Commentary Here - www.nist.gov/whats-wrong-picture-nist-face-analysis-program-helps-find-answers

See the Full Report Here - nvlpubs.nist.gov/nistpubs/ir/2023/NIST.IR.8491.pdf

Liveness.com Lists Free 2D Liveness Detection Providers Below

FaceTec's 2D Liveness Checks are 100% Server-Side and are ~98% accurate against random Level 1-3 Spoof Attacks (but not Level 4 & 5), so they are nowhere near as secure as 3D Liveness (99.999%+ accurate) with a Device SDK. However, there are scenarios where 2D Liveness Checks add some security; for example, at a Customs Checkpoint in an airport, or at a semi-supervised retail store's self-checkout. In these scenarios a fraudster is unlikely to be able to use a deepfake image or bypass the camera to inject a recorded video, so 2D Liveness can provide some value.

2D Liveness doesn't require a Device SDK or special user interface, and it works on any mugshot-style 2D face photo. For FaceTec Customers, FREE 2D Liveness checks are unlimited, and the images are processed 100% on the Customer's Server.

Free 2D Liveness Detection is provided to ALL FaceTec Customers & Partners, so you can contact ANY* Certified FaceTec Provider and ask them about Free 2D Liveness Detection, or visit the 2D Passive Liveness Check Developers page for more information. *Participation by FaceTec Distribution Partners may vary.

FaceTec Certified 3D Liveness Providers

3D Liveness Detection is much stronger than 2D, and to prove it, FaceTec created a $600,000 Spoof Bounty Program that challenges attackers to beat its defenses against Level 1, 2 & 3 PAD attacks, Level 4 Template Tampering, and Level 5 Virtual-Camera & Video Injection Attacks.

Read FaceTec's 2017-2025 3rd-Party Testing Lab Report History here - FaceTec_3rd_Party_Testing_Report.pdf

Organizations have a fiduciary duty to provide the strongest Liveness Detection when they use remote onboarding, identity verification or face authentication. FaceTec's AI now performs over 3,700,000,000 3D Liveness Checks annually!

FaceTec Certified 3D Liveness Vendors

With security powered & proven by the $600,000 Spoof Bounty Program & NIST/NVLAP Lab Certified PAD: Level 1, 2, 3, 4 & 5 AI*

Incentivized public bypass testing for Template Tampering, Level 1-3 Presentation, Video Replay, Deepfake Injection, Virtual Camera, and MIPI/HDMI Adapter Attacks.

*Vendors listed above have not been individually tested by an NVLAP/NIST-accredited lab for Level 1, 2 & 3 Presentation Attacks, but they distribute FaceTec's software, which has been Certified to Pass Level 1-5 with ongoing regression testing.

Liveness for Onboarding, KYC, and Face Verification

Requiring every new user to prove their 3D Liveness before they are asked to present an ID Document during digital onboarding is in itself a huge deterrent to fraudsters who don't ever want their real faces on camera.

If an onboarding system has a weakness, bad actors will exploit it to create as many fake accounts as possible. To prevent this, strong Liveness Detection during new account onboarding should be required. Once it is proven that the new account belongs to a real human, their biometric data can be stored as a trusted reference for their digital identity in the future.
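The onboarding order described above (Liveness first, then the ID Document, then matching, then a duplicate check, then storage of the trusted reference) can be sketched as a small Python pipeline. Every callable passed in (`check_liveness`, `id_photo_from_document`, `faces_match`, `is_duplicate`, `store_reference`) is a hypothetical placeholder for a real vendor component; only the ordering is the point. The 1:N duplicate step follows the Enrollment entry in the Glossary.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class OnboardingResult:
    accepted: bool
    reason: str  # for internal audit logs only; show the user a generic message


def onboard(
    session: dict,
    check_liveness: Callable[[dict], bool],
    id_photo_from_document: Callable[[dict], Optional[bytes]],
    faces_match: Callable[[dict, bytes], bool],
    is_duplicate: Callable[[dict], bool],
    store_reference: Callable[[dict], None],
) -> OnboardingResult:
    """Hypothetical onboarding flow: each step runs only if every earlier step passed."""
    # 1. Prove Liveness before asking for any ID Document.
    if not check_liveness(session):
        return OnboardingResult(False, "liveness failed")
    # 2. Only a proven-live user gets to present an ID Document.
    id_photo = id_photo_from_document(session)
    if id_photo is None:
        return OnboardingResult(False, "no usable ID document")
    # 3. 1:1 match the live face against the ID Document photo.
    if not faces_match(session, id_photo):
        return OnboardingResult(False, "face does not match ID")
    # 4. 1:N search of existing enrollments to catch duplicate accounts.
    if is_duplicate(session):
        return OnboardingResult(False, "duplicate enrollment")
    # 5. Store the Face Data as the trusted reference for future re-verification.
    store_reference(session)
    return OnboardingResult(True, "enrolled")


if __name__ == "__main__":
    stored = []
    result = onboard(
        {"user": "new-applicant"},
        check_liveness=lambda s: True,
        id_photo_from_document=lambda s: b"id-photo-bytes",
        faces_match=lambda s, photo: True,
        is_duplicate=lambda s: False,
        store_reference=stored.append,
    )
    print(result, len(stored))
```

Keeping the failure reason out of the user-facing response matters for the same hill-climbing reason discussed in the ISO 30107-3 section.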

Liveness for Ongoing Face Re-Verification (Password/PKI Replacement)

Since most biometric attacks are spoof attempts or videos injected using virtual-camera software, strong 3D Liveness Detection during user authentication should be mandatory. With multiple high-quality photos of almost everyone available on Google or Facebook, a biometric authenticator cannot rely on secrecy for its security.

3D Liveness Detection is the first and most important line of defense against targeted spoof attacks on remote identity verification systems. The second line of defense is a very low FAR (see Glossary, below) for accurate biometric matching.

With 3D Liveness Detection you can't even make a copy of your biometric data that would fool the system even if you wanted to. Liveness catches the copies by detecting generation loss, and only the genuine physical user can gain access.

ISO/IEC 30107-3 - Presentation Attack Detection Standard: Originally Published in 2017

www.iso.org/standard/67381 is the International Organization for Standardization's (ISO) testing guidance for evaluation of Anti-Spoofing technology, a.k.a. Presentation Attack Detection or "PAD". Four 30107 documents have been published to date, including ISO/IEC 30107-4:2020, the precursor to ISO/IEC WD 30107-4 currently in progress.

Released in 2017, ISO 30107-3 served as official guidance for determining whether the subject of a biometric scan is alive. But because it allows PAD Checks to be compounded with Matching, it can produce confusing, invalid results. Since 2020, with the introduction of deepfake puppets and other attack vectors not conceived of at the time of publication, ISO 30107-3 has been considered by many experts to be outdated and incomplete.

Due to "hill-climbing" attacks (see Glossary, bottom of page), biometric systems should never reveal which part of the system did or didn't "catch" a spoof. And while ISO 30107-3 gets a lot right, it unfortunately encourages testing Liveness and Matching at the same time, whereas the scientific method requires testing as few variables as possible at once. Liveness testing should be done with a solely Boolean (true/false) response, and it should not allow systems to have multiple decision layers that could let an artifact pass Liveness but fail Matching because it didn't "look" enough like the enrolled subject.
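The case for a Boolean-only Liveness response can be illustrated with a toy Python simulation. It assumes a made-up scoring function (a stand-in for a real Liveness model, not how any vendor actually scores) and an attacker who greedily keeps any artifact tweak the API reports as "better." `THRESHOLD`, `HIDDEN_TARGET`, and all function names are hypothetical.

```python
import math

THRESHOLD = 0.95                         # hypothetical internal decision threshold
HIDDEN_TARGET = [0.3, -0.7, 0.55, 0.1]   # toy stand-in for what the model accepts as "live"


def internal_score(artifact):
    """Toy Liveness model: artifacts closer to the hidden target score higher (0..1]."""
    return 1.0 / (1.0 + math.dist(artifact, HIDDEN_TARGET))


def leaky_api(artifact):
    """Anti-pattern: returns the raw score to the caller."""
    return internal_score(artifact)


def boolean_api(artifact):
    """Returns only pass/fail (as 1.0/0.0 so both APIs can share one attacker)."""
    return 1.0 if internal_score(artifact) >= THRESHOLD else 0.0


def hill_climb(api, step=0.05, max_rounds=100):
    """Greedy attacker: nudge one parameter at a time, keep any change the API rewards.

    Returns (passed, queries). The test harness, not the attacker, checks whether
    the current artifact would pass.
    """
    artifact = [0.0] * len(HIDDEN_TARGET)
    best = api(artifact)
    queries = 1
    for _ in range(max_rounds):
        if internal_score(artifact) >= THRESHOLD:
            return True, queries
        improved = False
        for i in range(len(artifact)):
            for delta in (step, -step):
                trial = artifact.copy()
                trial[i] += delta
                response = api(trial)
                queries += 1
                if response > best:
                    artifact, best, improved = trial, response, True
                    break
        if not improved:
            break  # the API gave no signal to follow
    return internal_score(artifact) >= THRESHOLD, queries


if __name__ == "__main__":
    print("score leaked:", hill_climb(leaky_api))
    print("boolean only:", hill_climb(boolean_api))
```

When the score leaks, the attacker walks the artifact step by step to a passing state; with pass/fail only, every failing tweak looks identical, so the greedy search stalls immediately. A Boolean response hides the gradient rather than making spoofs impossible, so the Liveness check itself still has to be strong.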

Why iBeta/Lab PAD Testing Isn't Enough...

"It Ain't What You Don't Know That Gets You Into Trouble. It's What You Think You Know That Just Ain't So." - Mark Twain

In our opinion, the iBeta PAD tests alone do not adequately represent the real-world threats a Liveness Detection System will face from hackers. Any 3rd-party testing is better than nothing, but taken at face value, iBeta tests provide a false sense of security due to being incomplete, too brief, having too much variation between vendors, and being much TOO EASY to pass.

Unfortunately, iBeta allows vendors to choose whatever device(s) they WANT TO USE for the test, and most choose a new model device with an 8-12MP camera. To put this in perspective, a 720p webcam is not even 1MP, and the higher the quality/resolution of the camera sensor, the easier the testing is to pass.
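The resolution gap is simple arithmetic. This Python snippet assumes standard 16:9 frame sizes for 720p and 1080p and a typical 4000x3000 layout for a 12MP phone sensor (an assumption; sensor layouts vary).

```python
def megapixels(width: int, height: int) -> float:
    """Resolution in megapixels (millions of pixels)."""
    return width * height / 1_000_000


RESOLUTIONS = {
    "720p webcam": (1280, 720),
    "1080p webcam": (1920, 1080),
    "12MP phone camera (assumed 4000x3000)": (4000, 3000),
}

if __name__ == "__main__":
    for name, (w, h) in RESOLUTIONS.items():
        print(f"{name}: {megapixels(w, h):.2f} MP")
```

That works out to roughly 13 times as many pixels for the 12MP sensor as for a 720p webcam.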

Even though most consumers and end users don't have access to the "pay-per-view" ISO 30107-3 Standard document, iBeta refuses to add disclaimers to their Conformance Letters to warn customers & end users that their PAD tests ONLY contain Presentation Attacks, and not attempts to bypass the camera/sensor. It is also unfortunate that ISO & iBeta both conflate Matching & Liveness into one unscientific testing protocol, making it impossible to know whether the Liveness Detection is actually working as it should in scenarios where matching is included.

PAD means that iBeta testing considers only artifacts physically shown to a sensor. Even though injected digital attacks are the most scalable, iBeta DOES NOT TEST for any type of Virtual Camera Attack or Template Tampering as part of their PAD testing. So iBeta/Lab PAD testing, no matter what Level it is, is NEVER enough to ensure real-world security. As far as we know, iBeta has never offered any sensor bypass testing to any PAD vendor at any time before this writing.

iBeta indirectly allows vendors to influence the number of attacks in their time-based testing, because some vendors have much longer session times than others. By extending the time it takes to complete a session, a vendor can limit the number of attacks that can be performed in the allotted time. The goal of biometric security testing is to expose vulnerabilities, and when the number of attacks, the devices, and the tester skill levels are non-standardized, the testing is NOT equally difficult between vendors and/or isn't representative of real-world threats.
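How session length caps the number of attacks in a fixed window can be shown with a short Python calculation. The window length, session times, and reset time below are hypothetical values chosen for illustration; the page does not state iBeta's actual test durations.

```python
def max_attack_sessions(window_minutes: float, session_seconds: float,
                        reset_seconds: float = 0.0) -> int:
    """Upper bound on complete attack sessions that fit in a fixed testing window."""
    per_attempt = session_seconds + reset_seconds
    if per_attempt <= 0:
        raise ValueError("each attempt must take a positive amount of time")
    return int(window_minutes * 60 // per_attempt)


if __name__ == "__main__":
    # Hypothetical: a 60-minute window and 10 s for the tester to reset the artifact.
    for session in (5, 30):
        print(f"{session:>2} s sessions -> {max_attack_sessions(60, session, 10)} attacks")
```

Under these made-up numbers, a vendor whose sessions take 30 seconds instead of 5 faces fewer than half as many attacks in the same hour.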

It's important to note that NO Level 3 testing is offered by iBeta any longer. It was offered for a few months under a "Level 3 Conformance," but then NIST notified iBeta that they didn't believe iBeta was capable of performing such important and difficult testing, and iBeta had to remove the Level 3 testing option. However, iBeta still listed Level 3 testing on their website for over a year. The editors of this site believe that a disclaimer stating "Level 3 cannot be tested by iBeta under its NIST accreditation" IS purposefully omitted to make iBeta's testing menu look more complete and their lab more competent.

Note: iBeta staff have recently stated publicly that it was their "business" decision not to perform Level 3 Testing, but this is false. On phone calls and in emails, iBeta staff repeatedly told editors of this website that iBeta was not able to perform Level 3 testing due to NIST's limitations. *This is disputed by iBeta.

iBeta doesn't usually test a vendor's face Liveness Detection software in web browsers, only in native smartphone apps, so numerous untested digital attack vectors exist even for systems that pass PAD testing. Another huge red flag in iBeta's testing is that they allow as much as 15% BPCER (Bona fide Presentation Classification Error Rate), which we call False Reject Rate (or FRR). This enables unscrupulous vendors to tighten security thresholds just to pass the test, then lower security in their real product when customers experience poor usability. It has been verified in real-world testing that at least two vendors who claim a 0% Presentation Attack (PA) Success Rate in iBeta testing have, in independent testing, been found to have PA Success Rates of over 4%.
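BPCER and its counterpart, APCER (Attack Presentation Classification Error Rate), are simple proportions. This Python sketch computes both from labeled test decisions. Reporting APCER per artifact species and taking the worst case reflects how ISO/IEC 30107-3 results are generally reported, but the function names, data format, and test data are our own assumptions.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def bpcer(bona_fide_accepted: Iterable[bool]) -> float:
    """Share of genuine (bona fide) presentations wrongly classified as attacks.

    Each item is True if the system accepted that genuine presentation.
    """
    results = list(bona_fide_accepted)
    if not results:
        raise ValueError("no bona fide presentations")
    return sum(not ok for ok in results) / len(results)


def apcer_by_species(attacks: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of attack presentations wrongly accepted as bona fide, per artifact species.

    Each item is (species, accepted_as_bona_fide).
    """
    totals: Dict[str, int] = defaultdict(int)
    passed: Dict[str, int] = defaultdict(int)
    for species, accepted in attacks:
        totals[species] += 1
        passed[species] += accepted
    return {species: passed[species] / totals[species] for species in totals}


if __name__ == "__main__":
    # Hypothetical test run: 100 genuine attempts, 15 of them rejected.
    genuine = [True] * 85 + [False] * 15
    attacks = ([("paper photo", False)] * 50
               + [("video replay", False)] * 48 + [("video replay", True)] * 2)
    per_species = apcer_by_species(attacks)
    print(f"BPCER = {bpcer(genuine):.0%}")
    print(per_species, "worst-case APCER =", max(per_species.values()))
```

In this hypothetical run the system rejects 15% of genuine users, the maximum the text says iBeta allows, which is exactly the usability cost a vendor might later relax away in production.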

Note: iBeta DOES NOT require production version verification or require the vendor to sign an affidavit stating they will not lower security thresholds in production versions of their software.

Remember, robust Liveness Detection must cover all attack vectors, including digital attack vectors, so don't be fooled by an iBeta "Conformance" badge. While it's better than nothing, it's nowhere near enough. Make your vendor sign an affidavit saying they have not lowered security thresholds, demand they prove they have undergone Penetration Testing for Digital Attack Vectors, and demand the vendor stand up a Spoof Bounty Program before they can earn your business.

To read FaceTec's full Level 1-5 Testing History see - FaceTec_3rd_Party_Testing_Report.pdf

FaceTec's $600,000 Spoof Bounty Program

Insist that your biometrics vendor maintain a persistent Spoof Bounty Program to ensure they are aware of and robust to any emerging threats, like deepfakes, video injection, or virtual-camera hijacking. As of this writing, the only Biometric Vendor with an active Spoof Bounty is FaceTec. Having rebuffed over 150,000 spoof attacks, the $600,000 Spoof Bounty Program's goal remains to uncover unknown vulnerabilities in the FaceTec Liveness AI and Security scheme. If any are found, they are patched, and the security levels elevated even further. Visit bounty.facetec.com to participate.

No Stored Liveness Data = No Honeypot Risk

Two types of data are required for every User Authentication: Face Data (for matching) and Liveness Data (to prove the Face Data was collected from a live person).

Liveness Data must be timestamped, remain valid for only a few minutes, and then be deleted. Only Face Data should ever be stored. New Liveness Data must be collected for every authentication attempt.

Face Data should be encrypted and stored without the corresponding Liveness Data, so it does not create honeypot risk.

Note: Think of the stored Face Data as the lock, the User's newly collected Face Data as a One-Time-Use key, and the Liveness Data as proof that key has never been used before.
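The one-time-key model can be sketched in Python. This assumes proof IDs are issued server-side right after a successful live capture and that "a few minutes" means a hypothetical 180-second window; it shows only the freshness and single-use bookkeeping, not the Liveness analysis, encryption, or Face Data storage. `LivenessProofStore` is an illustrative name, not a real API.

```python
import secrets
import time
from typing import Callable, Dict


class LivenessProofStore:
    """Tracks short-lived, single-use Liveness proofs. Nothing outlives its TTL."""

    def __init__(self, ttl_seconds: float = 180.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._pending: Dict[str, float] = {}  # proof_id -> issue time

    def issue(self) -> str:
        """Record a fresh proof after a successful live capture; return its random ID."""
        self._prune()
        proof_id = secrets.token_urlsafe(16)
        self._pending[proof_id] = self._clock()
        return proof_id

    def redeem(self, proof_id: str) -> bool:
        """True only for a known, unexpired, never-used proof; a redeemed proof is deleted."""
        self._prune()
        return self._pending.pop(proof_id, None) is not None

    def _prune(self) -> None:
        # Delete expired Liveness Data so it is never stored long-term.
        now = self._clock()
        for proof_id in [p for p, t in self._pending.items() if now - t > self._ttl]:
            del self._pending[proof_id]


if __name__ == "__main__":
    store = LivenessProofStore()
    key = store.issue()
    print(store.redeem(key))  # first use
    print(store.redeem(key))  # replay attempt
```

Because a proof is deleted on first use or on expiry, a captured or replayed proof ID is worthless, and no long-lived Liveness Data accumulates to form a honeypot.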

Early Academic Papers About Liveness & Anti-Spoofing

One of the earliest papers on Liveness, "Spoofing and Anti-Spoofing Measures," was published by Stephanie Schuckers, Ph.D., in 2002, and it is widely regarded as the foundation of today's academic body of work on the subject. The paper states that "Liveness detection is based on recognition of physiological information as signs of life from liveness information inherent to the biometric."

Later, in 2016, her follow-up paper, "Presentations and Attacks, and Spoofs, Oh My," continued to influence presentation attack detection research and testing.

Ask the Editor

Ask The Editor: Is Facial Recognition the Same as Liveness & Facial Verification & Face Authentication?

No! And we need to start using the correct terminology if we want to stop confusing people about biometrics.

Facial Recognition is for surveillance. It's the 1-to-N matching of images captured with cameras the user doesn't control, like those in a casino or an airport. And it only provides "possible" matches for the surveilled person from face photos stored in an existing database.

Face Verification (1:1 Matching to a legal identity data source+Liveness), is commonly used in onboarding users to new accounts. The trusted data can come from an ID document, a Passport chip, or a Government photo database.

Face Authentication (1:1 Matching+Liveness), on the other hand, takes user-initiated data collected from a device they do control and confirms that user's identity for their own direct benefit, like, for example, secure account access.

They may share a resemblance and even overlap in some ways, but don't lump them together. Like any powerful tech, this is a double-edged sword: Facial Recognition is a threat to privacy, while Facial Verification is a huge win for it.

Ask The Editor: Should We Fear Centralized Face Matching?

Fear of biometric matching stems from the belief that centralized storage of biometric data creates a "honeypot" that, if breached, compromises the security of all other accounts that rely on that same biometric data.

Biometric detractors argue, "You can reset your password if stolen, but you can't reset your face." While this is true, it is a failure of imagination to stop there. We must ask, "What would make centralized biometric authentication safe?"

The answer is strong Liveness Detection, backed by a public spoof bounty program, that requires the user to provide new Liveness Data every time they log in. With this AI in place, the biometric honeypot is no longer something to fear, because the security doesn't rely on our biometric data being kept secret; it relies on that data being provided by our living selves.

Learn more about how strong Liveness Makes Centralized Safe in this comprehensive FindBiometrics white paper.

Ask The Editor: Should Liveness Detection be required by law?

We believe legislation must be passed to make strong Liveness Detection mandatory if biometrics are used for Remote Identity Proofing in KYC/AML-regulated industries. The reality is, all of our personal data has already been breached, so we can no longer trust Knowledge-Based Authentication (KBA). We must now turn our focus from maintaining databases full of "secrets" to securing attack surfaces. For the public good, current laws already require that organic foods be certified and that every medical drug be tested and approved. Likewise, governments worldwide should require strong Liveness Detection to protect the biometric security and sensitive personal information of every citizen. Liveness AI should be tested by labs informed by the latest ID Proofing Guidelines from ENISA.

Ask The Editor: Why doesn't 2D Face Matching work very well?

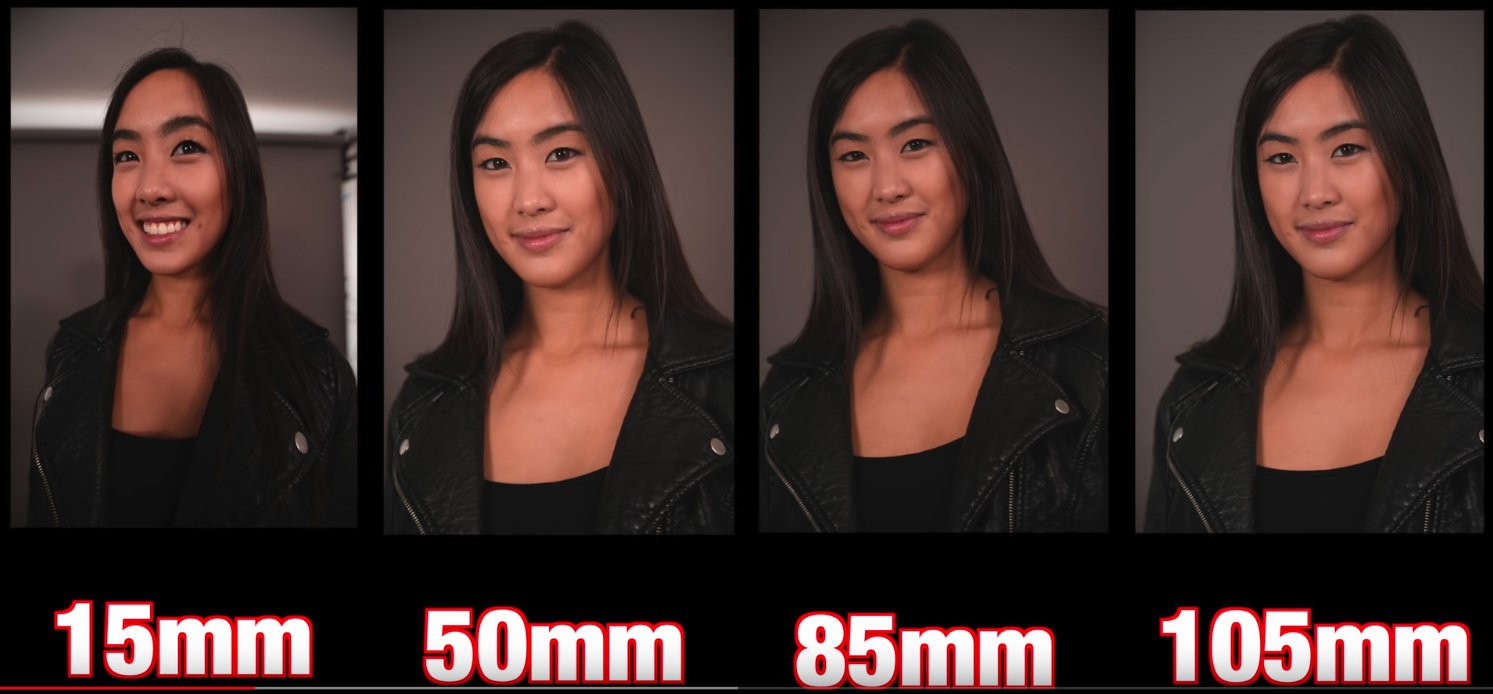

We've all heard an actor say, "get my good side," and the best photographers know which distances and lenses make portrait photos the most flattering. This is because a real 3D human face contains orders of magnitude more data than a typical 2D photo, and when a 3D face is flattened into a single 2D layer, depth data is lost, which creates significant issues. In the real world, capture distance, camera position, and lens focal length play big parts in how well a derivative 2D photo represents the original 3D face.

Source: "Best Portrait Lens – Focal Length, Perspective & Distortion," Matt Granger, Oct 27, 2017
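The effect of capture distance can be estimated with an idealized pinhole-camera model, sketched in Python below. It assumes a hypothetical 10 cm depth between the tip of the nose and the plane of the ears and ignores lens distortion; real faces and lenses vary.

```python
def nose_to_ear_scale_ratio(camera_to_nose_cm: float,
                            nose_to_ear_depth_cm: float = 10.0) -> float:
    """How much larger the nose renders relative to the ears under a pinhole model.

    Projected size is proportional to focal_length / distance. From a fixed camera
    position the focal length scales nose and ears equally, so the ratio is
    (d + depth) / d and depends only on the capture distance d.
    """
    if camera_to_nose_cm <= 0:
        raise ValueError("distance must be positive")
    return (camera_to_nose_cm + nose_to_ear_depth_cm) / camera_to_nose_cm


if __name__ == "__main__":
    for distance in (30, 60, 150, 300):  # arm's-length selfie up to across a room
        ratio = nose_to_ear_scale_ratio(distance)
        print(f"{distance:>3} cm: nose rendered {ratio - 1:.0%} larger relative to ears")
```

A selfie at about 30 cm exaggerates the nose by roughly a third relative to the ears, versus a few percent from 3 m. Longer lenses flatter faces largely because they are used from farther away, which is one reason the same person can look noticeably different to a 2D matcher.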

2D Face Matching will not always "see" her as the same person. In some frames she might look more like her sister or her cousin and she could match one of them even more highly than herself. In large datasets these visual differences are within the margin of error of the 2D algorithms and they make confidence in the 1:N match impossible. However, 3D FaceMaps not only provide more human signal for Liveness Detection, but they also provide data about the shape of the face which is combined with unique visual traits to increase accuracy, and enable the use of 1:N matching with significantly larger datasets.

FaceTec's current 1:N Search for De-duplication provides an Elastic-FAR of 1/125M to 1/1B+ at only 2% FRR.
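Why FAR matters so much more in 1:N search can be shown with a short Python calculation. It assumes every comparison against the gallery is independent with the same false-accept probability, which is a simplification (look-alike relatives, like the sister and cousin mentioned above, are not independent). The 10M-face gallery and the 1-in-1M FAR are hypothetical comparison points; the 1/125M and 1/1B values are the ones quoted above.

```python
import math


def expected_false_matches(far: float, gallery_size: int) -> float:
    """Average number of wrong people a single probe matches in a 1:N search."""
    return far * gallery_size


def prob_any_false_match(far: float, gallery_size: int) -> float:
    """Chance that one probe falsely matches at least one of `gallery_size` people.

    Computes 1 - (1 - far) ** gallery_size via log1p/expm1 to stay accurate for tiny FARs.
    """
    return -math.expm1(gallery_size * math.log1p(-far))


if __name__ == "__main__":
    gallery = 10_000_000  # hypothetical database of 10M enrolled faces
    for label, far in (("1/1M", 1e-6), ("1/125M", 1 / 125e6), ("1/1B", 1e-9)):
        print(f"FAR {label}: {expected_false_matches(far, gallery):.2f} expected false matches, "
              f"{prob_any_false_match(far, gallery):.2%} chance of at least one")
```

With a 10M-face gallery, a 1-in-1M FAR yields about 10 false candidates per search, while 1 in 125M drops that to 0.08.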

Ask The Editor: What's the Problem With Text & Photo CAPTCHAs?

CAPTCHA, an acronym for "Completely Automated Public Turing test to tell Computers and Humans Apart," is a simple challenge-response test used in computing to determine whether the user is a human or a spam bot.

In an article on TheVerge.com, Josh Dzieza writes, "Google pitted one of its machine learning algorithms against humans in solving the most distorted text CAPTCHAs: the computer got the test right 99.8 percent of the time, while the humans got a mere 33 percent."

Jason Polakis, a computer scientist, used off-the-shelf image recognition tools, including Google's own image search, to solve Google's image CAPTCHA with 70% accuracy. He states, "You need something that's easy for an average human, it shouldn't be bound to a specific subgroup of people, and it should be hard for computers at the same time."

Even without AI, services like deathbycaptcha.com and anti-captcha.com allow spam bots to bypass the challenge-response tests by using proxy humans to complete them. With so many people willing to do this work, CAPTCHAs are cheap to defeat at scale: workers earn between $0.25 and $0.60 for every 1,000 CAPTCHAs solved (webemployed).
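The scale argument is simple arithmetic. This Python snippet uses the worker pay quoted above as a rough lower-bound proxy for the cost of defeating CAPTCHAs in bulk; the services' retail prices are higher and are not stated here. The function name is illustrative.

```python
def solver_payout_usd(captchas: int, usd_per_thousand: float) -> float:
    """Total paid to human solvers for `captchas` CAPTCHAs at a per-1,000 rate."""
    return captchas / 1000 * usd_per_thousand


if __name__ == "__main__":
    # Worker pay quoted above: $0.25 to $0.60 per 1,000 CAPTCHAs.
    for rate in (0.25, 0.60):
        print(f"1,000,000 CAPTCHAs at ${rate:.2f}/1k: ${solver_payout_usd(1_000_000, rate):,.2f}")
```

Even at the top rate, the workers solving a million CAPTCHAs are paid only about $600 in total.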

Resources & Whitepapers

European Union Agency for Cybersecurity (ENISA) - Remote Identity Proofing - Attacks & Countermeasures - Published

https://www.enisa.europa.eu/publications/remote-identity-proofing-attacks-countermeasures

Information Security Magazine - Dorothy E. Denning's 2001 article, "It Is 'Liveness,' Not Secrecy, That Counts"

FaceTec: Liveness Detection - Biometrics Final Frontier & FaceTec Reply to NIST 800-63 RFI

Gartner: "Presentation attack detection (PAD, a.k.a. 'liveness testing') is a key selection criterion. ISO/IEC 30107 'Information Technology - Biometric Presentation Attack Detection' was published in 2017."

(Gartner's Market Guide for User Authentication, Analysts: Ant Allan, David Mahdi, Published: 26 November 2018). FaceTec's ZoOm was cited in the report. For subscriber access: https://www.gartner.com/doc/3894073?ref=mrktg-srch.

Forrester, "The State Of Facial Recognition For Authentication - Expedites Critical Identity Processes For Consumers And Employees" By Andras Cser, Alexander Spiliotes, Merritt Maxim, with Stephanie Balaouras, Madeline Cyr, Peggy Dostie. For subscriber access: https://www.forrester.com/report/The+State+Of+Facial+Recognition+For+Authentication+And+Verification/-/E-RES141491

Ghiani, L., Yambay, D.A., Mura, V., Marcialis, G.L., Roli, F. and Schuckers, S.A., 2017. Review of the Fingerprint Liveness Detection (LivDet) competition series: 2009 to 2015. Image and Vision Computing, 58, pp.110-128:

https://www.clarkson.edu/sites/default/files/2017-11/Fingerprint%20Liveness%20Detection%2009-15.pdf

Schuckers, S., 2016. Presentations and attacks, and spoofs, oh my. Image and Vision Computing, 55, pp.26-30:

https://www.clarkson.edu/sites/default/files/2017-11/Presentations%20and%20Attacks.pdf

Schuckers, S.A., 2002. Spoofing and anti-spoofing measures. Information Security Technical Report, 7(4), pp.56-62:

https://www.clarkson.edu/sites/default/files/2017-11/Spoofing%20and%20Anti-Spoofing%20Measures.pdf

FaceTec's Official Reply to NIST 800-63 RFI

https://facetec.com/NIST_800-63_RFI_FaceTec_Reply.pdf

FaceTec Discussion With ENISA on IDV Guidelines 2021

https://facetec.com/ENISA_RFI_Remote_ID_Attacks_FaceTec_Countermeasures.pdf

Glossary - Biometrics Industry & Testing Terms:

1:1 (1-to-1) – Comparing the biometric data from a subject User to the biometric data stored for the expected User. If the biometric data does not match above the chosen FAR level, the result is a failed match.

1:N (1-to-N) – Comparing the biometric data from one individual to the biometric data from a list of known individuals; the faces of the people on the list who look similar are returned. This is used for facial recognition surveillance, but can also be used to flag duplicate enrollments.

Artifact (Artefact) – An inanimate object that seeks to reproduce human biometric traits.

Authentication – The concurrent Liveness Detection, 3D depth detection, and biometric data verification (i.e., face matching) of the User.

Bad Actor – A criminal; a person with intentions to commit fraud by deceiving others.

Biometric – The measurement and comparison of data representing the unique physical traits of an individual for the purposes of identifying that individual based on those unique traits.

Certification – The testing of a system to verify its ability to meet or exceed a specified performance standard. iBeta used to issue certifications, but now they can only issue conformances.

Complicit User Fraud – When a User pretends to have fraud perpetrated against them, but has been involved in a scheme to defraud by stealing an asset and trying to get it replaced by an institution.

Cooperative User/Tester – When human Subjects used in the tests provide any and all biometric data that is requested. This helps to assess the complicit User fraud and phishing risk, but only applies if the test includes matching (not recommended).

Centralized Biometric – Biometric data is collected on any supported device, encrypted and sent to a server for Liveness and possibly enrollment and future authentication for that device or any other supported device. When the User's original biometric data is stored on a secure 3rd-party server, that data can continue to be used as the source of trust and their identity can be established and verified at any time. Any supported device can be used to collect and send biometric data to the server for comparison, enabling Users to access their accounts from all of their devices, new devices, etc., just like with passwords. Liveness is the most critical component of a centralized biometric system, and because certified Liveness did not exist until recently, centralized biometrics have not yet been widely deployed.

Credential Sharing – When two or more individuals do not keep their credentials secret and can access each other's accounts. This can be done to subvert licensing fees or to trick an employer into paying for time not worked (also called "buddy punching").

Credential Stuffing – A cyberattack where stolen account credentials, usually comprising lists of usernames and/or email addresses and the corresponding passwords, are used to gain unauthorized user account access.

Decentralized Biometric – When biometric data is captured and stored on a single device and the data never leaves that device. Fingerprint readers in smartphones and Apple's Face ID are examples of decentralized biometrics. They only unlock one specific device, they require re-enrollment on any new device, and they do not prove the identity of the User whatsoever. Decentralized biometric systems can be defeated easily if a bad actor knows the device's override PIN, allowing them to overwrite the User's biometric data with their own.

End User – An individual human who is using an application.

Enrollment – When biometric data is collected for the first time, encrypted and sent to the server. Note: Liveness must be verified and a 1:N check should be performed against all the other enrollments to check for duplicates.

Face Authentication – Authentication has three parts: Liveness Detection, 3D Depth Detection and Identity Verification. All must be done concurrently on the same face frames.

Face Matching – Newly captured images/biometric data of a person are compared to the enrolled (previously saved) biometric data of the expected User, determining if they are the same.

Face Recognition – Images/biometric data of a person are compared against a large list of known individuals to determine if they are the same person.

Face Verification – Matching the biometric data of the Subject User to the biometric data of the Expected User.

FAR (False Acceptance Rate) – The probability that the system will accept an imposter's biometric data as the correct User's data and incorrectly provide access to the imposter.

FIDO – Stands for Fast IDentity Online: A standards organization that provides guidance to organizations that choose to use Decentralized Biometric Systems (https://fidoalliance.org).

FRR/FNMR (False Rejection Rate/False Non-Match Rate) – The probability that a system will reject the correct User when that User's biometric data is presented to the sensor. If the FRR is high, Users will be frustrated with the system because they are prevented from accessing their own accounts. (FMR, the False Match Rate, is the counterpart of FAR, not of FRR.)
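FAR and FRR can both be estimated from test comparisons at a chosen threshold. The minimal sketch below assumes a similarity score where higher means "more alike" and a comparison is accepted when its score is at or above the threshold; the score lists are made-up illustrative numbers, not measured data.

```python
from typing import Sequence, Tuple


def error_rates(genuine: Sequence[float], impostor: Sequence[float],
                threshold: float) -> Tuple[float, float]:
    """Return (FAR, FRR) at a threshold; accept when score >= threshold."""
    # FAR: fraction of impostor comparisons wrongly accepted.
    far = sum(s >= threshold for s in impostor) / len(impostor)
    # FRR: fraction of genuine comparisons wrongly rejected.
    frr = sum(s < threshold for s in genuine) / len(genuine)
    return far, frr


genuine_scores = [0.95, 0.91, 0.88, 0.62]   # illustrative only
impostor_scores = [0.12, 0.35, 0.81, 0.20]  # illustrative only
print(error_rates(genuine_scores, impostor_scores, 0.8))  # (0.25, 0.25)
print(error_rates(genuine_scores, impostor_scores, 0.9))  # (0.0, 0.5)
```

Raising the threshold from 0.8 to 0.9 removes the false accept but doubles the false rejects, which is the trade-off that makes a high FRR frustrating for genuine Users.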

Hill-Climbing Attack – When an attacker uses information returned by the biometric authenticator (such as a match level or liveness score) to refine their attacks and increase the probability of spoofing the system.
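One common way to deny an attacker the feedback a hill-climbing attack needs is to return only a coarse decision and to cap repeated attempts. The sketch below is a hypothetical illustration of that idea, not a specific product's behavior: the score is assumed to come from some internal matcher, and the threshold, attempt limit, and time window are made-up policy values.

```python
import time
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Hypothetical policy values.
THRESHOLD = 0.8
MAX_ATTEMPTS = 5
WINDOW_SECONDS = 300.0


@dataclass
class Authenticator:
    # Timestamps of recent attempts, per account.
    attempts: Dict[str, List[float]] = field(default_factory=dict)

    def respond(self, account_id: str, score: float,
                now: Optional[float] = None) -> str:
        """Return only "accept", "reject" or "locked"; never echo the score."""
        now = time.monotonic() if now is None else now
        recent = [t for t in self.attempts.get(account_id, [])
                  if now - t < WINDOW_SECONDS]
        if len(recent) >= MAX_ATTEMPTS:
            # Too many attempts in the window: refuse without evaluating.
            self.attempts[account_id] = recent
            return "locked"
        recent.append(now)
        self.attempts[account_id] = recent
        return "accept" if score >= THRESHOLD else "reject"
```

Because a near-miss and a wild miss both produce "reject", the attacker cannot tell which modifications to a spoof are moving it closer to acceptance.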

iBeta – A NIST/NVLAP-certified testing lab in Denver, Colorado; the only lab currently certifying biometric systems for anti-spoofing/Liveness Detection to the ISO 30107-3 standard (ibeta.com).

Identity & Access Management (IAM) – A framework of policies and technologies to ensure only authorized users have the appropriate access to restricted technology resources, services, physical locations and accounts. Also called identity management (IdM).

Imposter – A living person with traits so similar to the Expected User that the system determines the biometric data is from the same person.

ISO 30107-3 – The International Organization for Standardization's testing guidance for evaluation of Anti-Spoofing technology (www.iso.org/standard/67381.html).

Knowledge-Based Authentication (KBA) – An authentication method that seeks to prove the identity of someone accessing a digital service. KBA requires knowing a user's private information to prove that the person requesting access is the owner of the digital identity. Static KBA is based on a pre-agreed set of shared secrets. Dynamic KBA is based on questions generated from additional personal information.

Liveness Detection or Liveness Verification – The ability of a biometric system to determine whether data has been collected from a live human or an inanimate, non-living Artifact.

NIST – National Institute of Standards and Technology – The U.S. government agency that provides measurement science, standards, and technology to strengthen economic competitiveness in business and government (nist.gov).

Phishing – When a User is tricked into giving a Bad Actor their passwords, PII, credentials, or biometric data. Example: A User gets a phone call from a fake customer service agent who requests the User's password to a specific website.

PII – Personally Identifiable Information is information that can be used on its own or with other information to identify, contact, or locate a single person, or to identify an individual in context.

Presentation Attack Detection (PAD) – A framework for detecting presentation attack events. Related to Liveness Detection and Anti-Spoofing.

Root Identity Provider – An organization that stores biometric data appended to the corresponding personal information of individuals, and allows other organizations to verify the identities of Subject Users by providing biometric data to the Root Identity Provider for comparison.

Selfie Matching – When a user provides their own biometric data to be compared to trusted data that they provided previously or that is stored by any identity issuer. 2D Facial Recognition Algorithms are not well suited for Selfie Matching because a 2D image of a 3D human face looks very different depending on the distance between the camera and the face at capture.
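A pinhole-camera calculation shows why capture distance matters so much. Projected size is proportional to f·S/Z (focal length f, feature size S, distance Z), so a feature at the nose tip (distance Z) is magnified relative to one at the ears (distance Z + d) by (Z + d)/Z, and the focal length cancels. The nose-to-ear depth d = 0.10 m used below is a rough, assumed figure for illustration only.

```python
def relative_magnification(nose_distance_m: float, depth_m: float = 0.10) -> float:
    """Pinhole model: magnification of the nose tip relative to the ears.

    depth_m is an assumed nose-to-ear depth, used only for illustration.
    """
    return (nose_distance_m + depth_m) / nose_distance_m


for distance in (0.25, 0.5, 1.0):
    print(f"{distance:.2f} m -> {relative_magnification(distance):.2f}x")
```

Under this assumption the nose tip appears about 1.4x larger relative to the ears in a selfie taken at 0.25 m, but only about 1.1x at 1 m, so the same face yields noticeably different 2D images at the two distances.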

Spoof – When a non-living object that exhibits some biometric traits is presented to a camera or biometric sensor. Photos, masks or dolls are examples of Artifacts used in spoofs.

Subject User – The individual that is presenting their biometric data to the biometric sensor at that moment.

Synthetic Identity – When a bad actor uses a combination of biometric data, name, social security number, address, etc. to create a new record for a person who doesn't actually exist, for the purposes of opening and using an account in that name.

Editors & Contributors

Kevin Alan Tussy

John Wojewidka

Josh Rose

All trademarks, logos and brand names are the property of their respective owners. All company, product and service names used on this website are for identification purposes only. Use of these names, trademarks and brands does not imply endorsement or denunciation.

© 2026, Liveness.com. All rights reserved.